[FFMPEG] A Summary of ffmpeg's Internal Components

Basic Components

Demuxer & Decoder

Demuxer: the demultiplexing module. It extracts the audio and video streams from a media container and passes them to the decoder. Common containers include MP4, MOV, AVI, TS, and MP3.

Decoder: receives the audio/video data produced by the demuxer and decodes it. Common codecs include h264, h265, vp8, vp9, aac, and opus.

- Demuxer

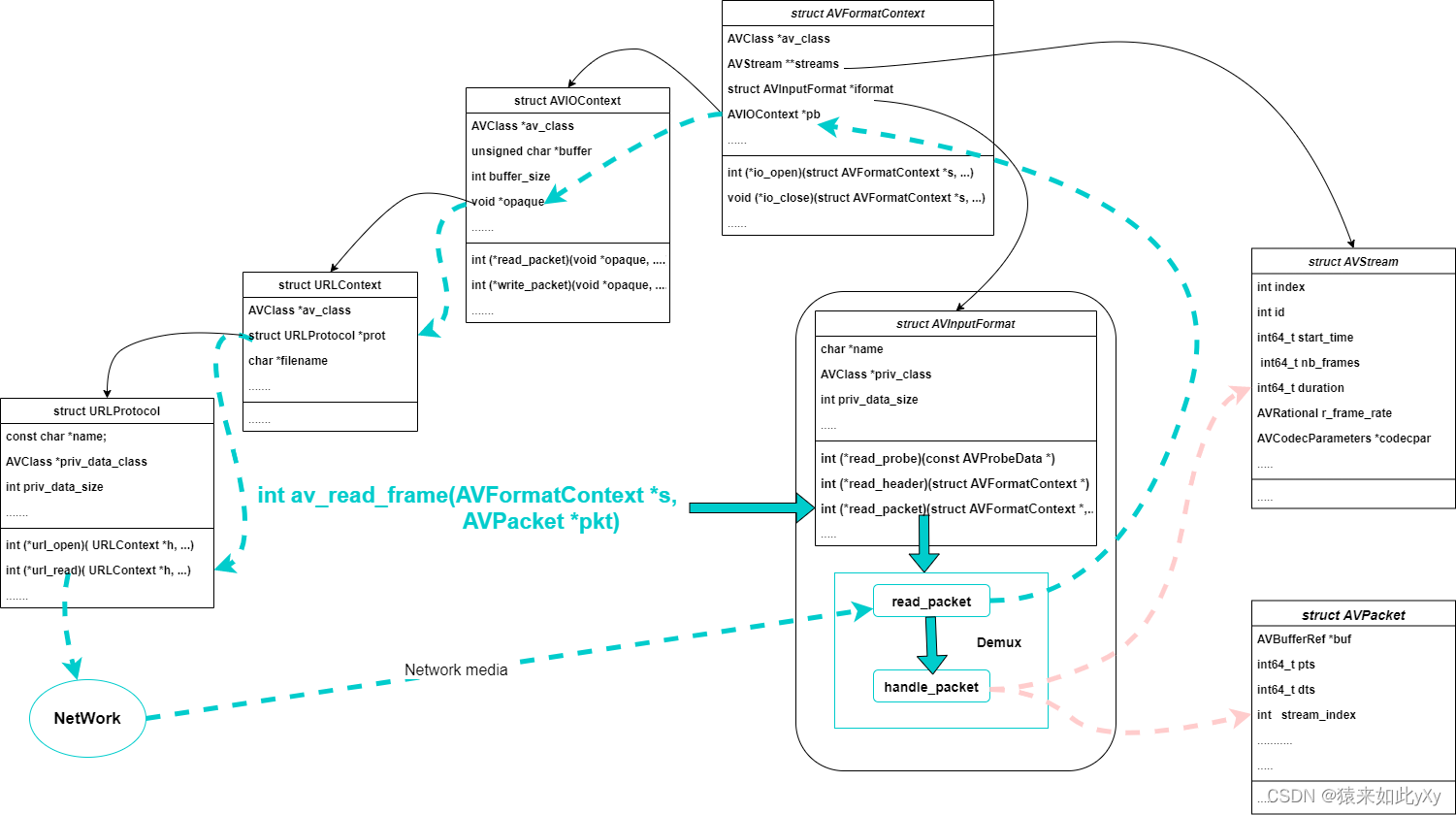

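In ffmpeg, struct AVFormatContext describes every audio/video format. Its member struct AVInputFormat *iformat describes the demuxer. Another important member is AVIOContext *pb, the IO module, which matches the input url to the corresponding IO protocol and reads or writes data through it.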

    struct AVFormatContext {
        const AVClass *av_class;
        const struct AVInputFormat *iformat;   // describes the demuxer
        const struct AVOutputFormat *oformat;  // describes the muxer
        ...
        AVIOContext *pb;                       // describes the IO module
        ...
        unsigned int nb_streams;
        AVStream **streams;                    // info for each audio/video stream after extraction
        ...
        // Initialize the AVIOContext from the input url and find the matching URLProtocol
        int (*io_open)(struct AVFormatContext *s, AVIOContext **pb, const char *url,
                       int flags, AVDictionary **options);
        void (*io_close)(struct AVFormatContext *s, AVIOContext *pb);
        ...
    };

    typedef struct AVInputFormat {
        const char *name;
        const struct AVCodecTag * const *codec_tag;
        const AVClass *priv_class;  ///< AVClass for the private context
        const char *mime_type;
        int raw_codec_id;
        int priv_data_size;
        ...
        // Key functions
        int (*read_probe)(const AVProbeData *);
        int (*read_header)(struct AVFormatContext *);
        int (*read_packet)(struct AVFormatContext *, AVPacket *pkt);
        int (*read_close)(struct AVFormatContext *);
        int (*read_seek)(struct AVFormatContext *, int stream_index,
                         int64_t timestamp, int flags);
        ...
    } AVInputFormat;
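To make the role of read_probe more concrete, here is a minimal sketch of score-based format detection. It is a toy model, not ffmpeg code: ToyProbeData, ToyInputFormat, toy_formats, and toy_probe_format are hypothetical names invented for illustration, and the two probes only check the well-known 'ftyp' and RIFF/"AVI " signatures.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-ins for AVProbeData and AVInputFormat; not ffmpeg types. */
typedef struct ToyProbeData {
    const unsigned char *buf;
    size_t size;
} ToyProbeData;

typedef struct ToyInputFormat {
    const char *name;
    int (*read_probe)(const ToyProbeData *pd); /* 0 = no match, 100 = certain */
} ToyInputFormat;

/* MP4/MOV files normally carry the box type 'ftyp' at bytes 4..7. */
static int toy_mov_probe(const ToyProbeData *pd)
{
    return (pd->size >= 8 && memcmp(pd->buf + 4, "ftyp", 4) == 0) ? 100 : 0;
}

/* AVI is a RIFF file whose form type at bytes 8..11 is "AVI ". */
static int toy_avi_probe(const ToyProbeData *pd)
{
    return (pd->size >= 12 && memcmp(pd->buf, "RIFF", 4) == 0 &&
            memcmp(pd->buf + 8, "AVI ", 4) == 0) ? 100 : 0;
}

static const ToyInputFormat toy_formats[] = {
    { "mov", toy_mov_probe },
    { "avi", toy_avi_probe },
};

/* Run every probe and return the name of the best-scoring format, or NULL. */
static const char *toy_probe_format(const unsigned char *buf, size_t size)
{
    ToyProbeData pd = { buf, size };
    const char *best = NULL;
    int best_score = 0;

    assert(buf != NULL);
    for (size_t i = 0; i < sizeof(toy_formats) / sizeof(toy_formats[0]); i++) {
        int score = toy_formats[i].read_probe(&pd);
        if (score > best_score) {
            best_score = score;
            best = toy_formats[i].name;
        }
    }
    return best;
}
```

Calling toy_probe_format() on the first bytes of a file returns "mov" for an MP4 header and NULL for data that no probe recognizes. The real demuxers score many more signals, so treat this only as the shape of the idea.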

So how is a demuxer used?

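    const char *url = "/data/music/test.mp4";

    // Allocate an AVFormatContext
    AVFormatContext *format_ctx = avformat_alloc_context();

    // 1. Match the url to a demuxer (by file extension and by probing the content);
    //    mp4 maps to ff_mov_demuxer
    // 2. Find the url protocol for the url type; this example is a local file,
    //    so it is ff_file_protocol. The IO module AVIOContext is initialized as well
    // 3. Call the demuxer's read_header to extract the audio/video info in the
    //    container and store it in AVStream **streams of the AVFormatContext
    avformat_open_input(&format_ctx, url, NULL, NULL);

    // Use the codec info in AVStream **streams to find the matching decoders
    avformat_find_stream_info(format_ctx, NULL);

    // Read data from the demuxer in a loop until end of file
    AVPacket *pkt = av_packet_alloc();
    while (av_read_frame(format_ctx, pkt) >= 0) {
        av_packet_unref(pkt);   // release the packet data before the next read
    }
    av_packet_free(&pkt);
    avformat_close_input(&format_ctx);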
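Step 2 in the comments above, choosing an IO protocol from the url, can be pictured with the following minimal sketch. It assumes a simplified rule: a leading "scheme:" selects that protocol, and anything else is treated as a local file. toy_protocol_name is a hypothetical helper, not an ffmpeg function, and it ignores special cases such as Windows drive letters.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: write the protocol name for `url` into `out`.
 * A "scheme:" prefix selects that protocol; everything else is a local file. */
static void toy_protocol_name(const char *url, char *out, size_t out_size)
{
    static const char scheme_chars[] =
        "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789+-.";
    const char *name = url;
    size_t len = strspn(url, scheme_chars); /* length of a possible scheme */

    assert(out_size > 0);
    if (len == 0 || url[len] != ':') {
        /* No scheme, e.g. "/data/music/test.mp4" */
        name = "file";
        len = 4;
    }
    if (len >= out_size)
        len = out_size - 1;                 /* truncate to fit the buffer */
    memcpy(out, name, len);
    out[len] = '\0';
}
```

With this rule, "/data/music/test.mp4" resolves to "file" while "rtsp://192.168.1.10/live" resolves to "rtsp".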

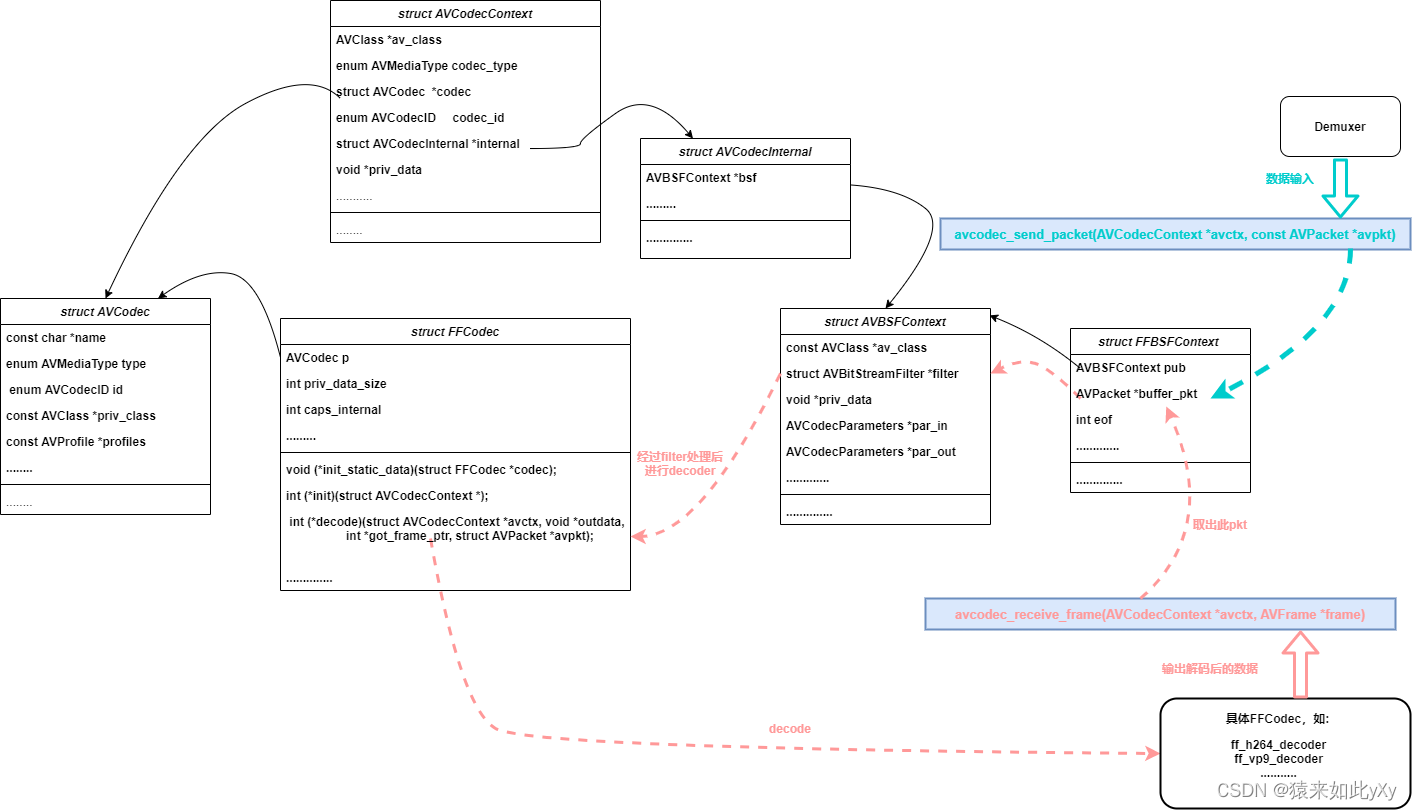

- Decoder

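In ffmpeg, struct AVCodecContext describes the context of a decoder or encoder. Its member struct AVCodec *codec is the concrete codec entity. Each struct AVCodec corresponds to a struct FFCodec, which embeds it as its member p, and FFCodec implements the actual decode function.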

    struct AVCodecContext {
        const AVClass *av_class;
        int log_level_offset;
        enum AVMediaType codec_type;    /* see AVMEDIA_TYPE_xxx */
        const struct AVCodec *codec;
        enum AVCodecID codec_id;        /* see AV_CODEC_ID_xxx */
        struct AVCodecInternal *internal;
        ...
    };

    typedef struct AVCodec {
        const char *name;
        const char *long_name;
        enum AVMediaType type;
        enum AVCodecID id;
        ...
        const AVClass *priv_class;      ///< AVClass for the private context
        const AVProfile *profiles;      ///< array of recognized profiles, or NULL if unknown,
                                        ///< array is terminated by {FF_PROFILE_UNKNOWN}
        ...
    } AVCodec;

    struct FFCodec {
        AVCodec p;
        ...
        const FFCodecDefault *defaults;
        void (*init_static_data)(struct FFCodec *codec);
        int (*init)(struct AVCodecContext *);
        union {
            int (*decode)(struct AVCodecContext *avctx, struct AVFrame *frame,
                          int *got_frame_ptr, struct AVPacket *avpkt);
            int (*decode_sub)(struct AVCodecContext *avctx, struct AVSubtitle *sub,
                              int *got_frame_ptr, const struct AVPacket *avpkt);
            int (*receive_frame)(struct AVCodecContext *avctx, struct AVFrame *frame);
            int (*encode)(struct AVCodecContext *avctx, struct AVPacket *avpkt,
                          const struct AVFrame *frame, int *got_packet_ptr);
            int (*encode_sub)(struct AVCodecContext *avctx, uint8_t *buf,
                              int buf_size, const struct AVSubtitle *sub);
            int (*receive_packet)(struct AVCodecContext *avctx, struct AVPacket *avpkt);
        } cb;
        int (*close)(struct AVCodecContext *);
        void (*flush)(struct AVCodecContext *);
        ...
    } FFCodec;
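The relationship between AVCodec and FFCodec (a public struct embedded as the first member of a private one) can be sketched as follows. This is a toy model under stated assumptions: ToyCodec, ToyFFCodec, the codec table, and the arithmetic "decode" callbacks are all hypothetical and only show how a lookup by codec id leads to the private callbacks.

```c
#include <assert.h>
#include <stddef.h>

enum ToyCodecID { TOY_CODEC_ID_NONE, TOY_CODEC_ID_H264, TOY_CODEC_ID_AAC, TOY_CODEC_ID_OPUS };

typedef struct ToyCodec {        /* public part, plays the role of AVCodec */
    const char *name;
    enum ToyCodecID id;
} ToyCodec;

typedef struct ToyFFCodec {      /* private wrapper, plays the role of FFCodec */
    ToyCodec p;                  /* must stay the first member for the cast below */
    int (*decode)(int packet);   /* placeholder for the real decode callback */
} ToyFFCodec;

static int toy_h264_decode(int packet) { return packet + 1; }
static int toy_aac_decode(int packet)  { return packet * 2; }

static const ToyFFCodec toy_codecs[] = {
    { { "h264", TOY_CODEC_ID_H264 }, toy_h264_decode },
    { { "aac",  TOY_CODEC_ID_AAC  }, toy_aac_decode  },
};

/* Look a decoder up by id and hand out only its public part. */
static const ToyCodec *toy_find_decoder(enum ToyCodecID id)
{
    for (size_t i = 0; i < sizeof(toy_codecs) / sizeof(toy_codecs[0]); i++)
        if (toy_codecs[i].p.id == id)
            return &toy_codecs[i].p;
    return NULL;
}

/* Recover the private wrapper from the public pointer (p is the first member). */
static const ToyFFCodec *toy_ffcodec(const ToyCodec *codec)
{
    return (const ToyFFCodec *)codec;
}

/* Dispatch to the codec-specific callback through the private wrapper. */
static int toy_decode(const ToyCodec *codec, int packet)
{
    assert(codec != NULL);
    return toy_ffcodec(codec)->decode(packet);
}
```

Application code only ever sees ToyCodec pointers, while the library side casts back to ToyFFCodec to reach the callbacks. That split keeps the callback table out of the public structure.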

How is a decoder used?

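    const char *url = "/data/music/test.mp4";

    // Allocate an AVFormatContext
    AVFormatContext *format_ctx = avformat_alloc_context();

    // 1. Match the url to a demuxer (by file extension and by probing the content);
    //    mp4 maps to ff_mov_demuxer
    // 2. Find the url protocol for the url type; this example is a local file,
    //    so it is ff_file_protocol. The IO module AVIOContext is initialized as well
    // 3. Call the demuxer's read_header to extract the audio/video info in the
    //    container and store it in AVStream **streams of the AVFormatContext
    avformat_open_input(&format_ctx, url, NULL, NULL);

    // Use the codec info in AVStream **streams to find the matching decoders
    avformat_find_stream_info(format_ctx, NULL);

    // Pick the video stream and open its decoder
    const AVCodec *dec = NULL;
    int index = av_find_best_stream(format_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, &dec, 0);
    AVCodecContext *codec_ctx = avcodec_alloc_context3(dec);
    avcodec_parameters_to_context(codec_ctx, format_ctx->streams[index]->codecpar);
    avcodec_open2(codec_ctx, dec, NULL);

    AVPacket *pkt = av_packet_alloc();
    AVFrame *frame = av_frame_alloc();

    // Demux and decode in a loop
    while (av_read_frame(format_ctx, pkt) >= 0) {    // read demuxed data from the demuxer
        if (pkt->stream_index == index) {
            // Send the data to the decoder, i.e. the codec
            avcodec_send_packet(codec_ctx, pkt);
            // Read decoded data back from the decoder
            while (avcodec_receive_frame(codec_ctx, frame) == 0)
                av_frame_unref(frame);
        }
        av_packet_unref(pkt);
    }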
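The send/receive pairing in the loop above follows a small state machine: the decoder may refuse input until its output has been read, it reports "try again" when it has nothing to return yet, and a NULL packet switches it into draining mode. The sketch below models that contract with a hypothetical one-slot decoder. ToyDecoder, TOY_EOF, and the doubling "decode" step are invented for illustration and are not ffmpeg APIs.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

#define TOY_EOF (-1000) /* hypothetical stand-in for an end-of-stream error code */

/* Hypothetical one-slot decoder: every packet value becomes one frame value. */
typedef struct ToyDecoder {
    int pending;     /* packet waiting to be decoded */
    int has_pending; /* 1 while `pending` holds a packet */
    int draining;    /* 1 once the flush (NULL) packet has been sent */
} ToyDecoder;

/* Feed one packet; a NULL packet starts draining. */
static int toy_send_packet(ToyDecoder *d, const int *pkt)
{
    if (d->draining)
        return TOY_EOF;
    if (!pkt) {
        d->draining = 1;
        return 0;
    }
    if (d->has_pending)
        return -EAGAIN;          /* caller must read the output first */
    d->pending = *pkt;
    d->has_pending = 1;
    return 0;
}

/* Fetch one frame; -EAGAIN means "send more input", TOY_EOF means "fully drained". */
static int toy_receive_frame(ToyDecoder *d, int *frame)
{
    if (d->has_pending) {
        *frame = d->pending * 2; /* the toy "decode" step */
        d->has_pending = 0;
        return 0;
    }
    return d->draining ? TOY_EOF : -EAGAIN;
}

/* Decode n packets into frames[]; returns the number of frames written. */
static size_t toy_decode_all(const int *pkts, size_t n, int *frames)
{
    ToyDecoder d = { 0, 0, 0 };
    size_t out = 0;
    int frame;

    for (size_t i = 0; i <= n; i++) {
        /* The extra iteration (i == n) sends the flush packet. */
        int ret = toy_send_packet(&d, i < n ? &pkts[i] : NULL);
        assert(ret == 0);
        (void)ret;
        while (toy_receive_frame(&d, &frame) == 0)
            frames[out++] = frame;
    }
    return out;
}
```

The inner while loop is the important part: after every send, output is read until the decoder asks for more input, which is the same pattern the real send/receive calls expect.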

The rough block diagram is as follows:

Encoder & Muxer

Encoder: the encoding module. It compresses raw video (YUV) or audio (PCM) data according to a codec specification. Common codecs include h264, h265, vp8, vp9, aac, and opus.

Muxer: packs the compressed audio/video data into a media file of a specific container format. Common formats include MP4, AVI, MOV, and TS.

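struct AVFormatContext describes every audio/video format, and its member struct AVOutputFormat *oformat describes the muxer. An encoder, like a decoder, is described by struct AVCodecContext together with struct AVCodec and struct FFCodec, so the definitions are not repeated here.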

So how are an encoder and a muxer used?

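    const char *filename = "/data/record/test.mp4";
    AVFormatContext *format_ctx = NULL;

    // Find the muxer (format_ctx->oformat) from the output url and allocate the AVFormatContext
    avformat_alloc_output_context2(&format_ctx, NULL, NULL, filename);

    // Find and initialize the IO module from the url
    avio_open2(&format_ctx->pb, filename, AVIO_FLAG_WRITE, NULL, NULL);

    // Find the encoder for the codec
    const AVCodec *enc = avcodec_find_encoder(format_ctx->oformat->video_codec);
    AVCodecContext *enc_ctx = avcodec_alloc_context3(enc);
    // width, height, pix_fmt, time_base, etc. must be set on enc_ctx here (omitted)

    // Open the encoder
    avcodec_open2(enc_ctx, enc, NULL);

    AVStream *stream = avformat_new_stream(format_ctx, NULL);
    avcodec_parameters_from_context(stream->codecpar, enc_ctx);

    // Write the container header before the first packet
    avformat_write_header(format_ctx, NULL);

    AVPacket *pkt = av_packet_alloc();
    while (!quit) {    // quit: flag set elsewhere when recording should stop
        // Send data from the input source (frame) to the encoder
        avcodec_send_frame(enc_ctx, frame);
        // Fetch the encoded data from the encoder
        while (avcodec_receive_packet(enc_ctx, pkt) == 0) {
            // Send the encoded data to the muxer
            av_write_frame(format_ctx, pkt);
            av_packet_unref(pkt);
        }
    }
    // Finalize the file
    av_write_trailer(format_ctx);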
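The first step above, finding a muxer from the output file name, boils down to a lookup on the file extension. A minimal sketch, assuming a tiny hard-coded table: ToyOutputFormat, toy_muxers, and toy_guess_muxer are hypothetical names, and the comparison is case-sensitive for brevity.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for the name/extension part of an output format. */
typedef struct ToyOutputFormat {
    const char *name;      /* muxer short name */
    const char *extension; /* file extension, without the dot */
} ToyOutputFormat;

static const ToyOutputFormat toy_muxers[] = {
    { "mp4",    "mp4" },
    { "mov",    "mov" },
    { "avi",    "avi" },
    { "mpegts", "ts"  },
};

/* Return the muxer name matching the file extension, or NULL if none matches. */
static const char *toy_guess_muxer(const char *filename)
{
    const char *dot;

    assert(filename != NULL);
    dot = strrchr(filename, '.');   /* last dot starts the extension */
    if (!dot)
        return NULL;
    for (size_t i = 0; i < sizeof(toy_muxers) / sizeof(toy_muxers[0]); i++)
        if (strcmp(dot + 1, toy_muxers[i].extension) == 0)
            return toy_muxers[i].name;
    return NULL;
}
```

For "/data/record/test.mp4" the sketch picks "mp4". A name with no extension, or one that is not in the table, yields NULL, in which case the caller would need to name the format explicitly.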

Filter

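FFMPEG defines AVFilter in libavfilter/avfilter.h. Filters generally operate on raw (uncompressed) audio/video streams and can be thought of as post-processing, for example video scaling or audio resampling.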

    struct AVFilterContext {
        const AVClass *av_class;        ///< needed for av_log() and filters common options
        const AVFilter *filter;         ///< the AVFilter of which this is an instance
        char *name;                     ///< name of this filter instance

        AVFilterPad *input_pads;        ///< array of input pads
        // Connects to the upstream filters
        AVFilterLink **inputs;          ///< array of pointers to input links
        unsigned nb_inputs;             ///< number of input pads

        AVFilterPad *output_pads;       ///< array of output pads
        // Connects to the downstream filters
        AVFilterLink **outputs;         ///< array of pointers to output links
        unsigned nb_outputs;            ///< number of output pads

        // An AVFilter normally lives in an AVFilterGraph, which assembles the complete pipeline
        struct AVFilterGraph *graph;    ///< filtergraph this filter belongs to

        // Commands sent to the AVFilter through the send API are cached in this queue
        struct AVFilterCommand *command_queue;

        // The AVFilter's activate function runs only while ready is non-zero,
        // i.e. while the filter is in the activated state
        unsigned ready;
        ...
    };

    typedef struct AVFilter {
        const char *name;
        const char *description;

        /**
         * List of static inputs.
         *
         * NULL if there are no (static) inputs. Instances of filters with
         * AVFILTER_FLAG_DYNAMIC_INPUTS set may have more inputs than present in
         * this list.
         */
        const AVFilterPad *inputs;

        /**
         * List of static outputs.
         *
         * NULL if there are no (static) outputs. Instances of filters with
         * AVFILTER_FLAG_DYNAMIC_OUTPUTS set may have more outputs than present in
         * this list.
         */
        const AVFilterPad *outputs;
        ...
        int (*init)(AVFilterContext *ctx);
        int (*init_dict)(AVFilterContext *ctx, AVDictionary **options);
        ...
        int (*process_command)(AVFilterContext *, const char *cmd, const char *arg,
                               char *res, int res_len, int flags);

        /**
         * Filter activation function.
         *
         * Called when any processing is needed from the filter, instead of any
         * filter_frame and request_frame on pads.
         *
         * The function must examine inlinks and outlinks and perform a single
         * step of processing. If there is nothing to do, the function must do
         * nothing and not return an error. If more steps are or may be
         * possible, it must use ff_filter_set_ready() to schedule another
         * activation.
         */
        int (*activate)(AVFilterContext *ctx);
    } AVFilter;

FilterGraph

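As the previous sections show, the modules in ffmpeg are all independent. The tools that ship with ffmpeg, such as ffplay and ffmpeg, each add a large amount of extra logic to combine modules such as the demuxer, decoder, and filter into a complete pipeline that implements a full feature, such as video playback or video recording.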

Is there a simpler way to build a pipeline directly? For video playback, for example, could it be done by calling just a few APIs?

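That is exactly what FilterGraph is for. A conf file connects the filters each feature needs ahead of time. An API then parses this conf and builds the pipeline from it. After that, feeding data into the pipeline is enough to get the whole thing running.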

For the detailed concepts of FilterGraph and the syntax of the conf file, see the earlier document: https://blog.csdn.net/weixin_38537730/article/details/129163510

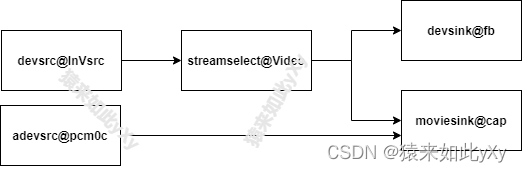

The following concrete example shows how FilterGraph works.

adevsrc@pcm0c=format=alsa:devname=/dev/audio/pcm0c[a],

devsrc@InVsrc=d=/dev/video:s=1280x720:r=30[v],

[v]streamselect@Video=inputs=1:map=0 -1[vout0][vout1],

[vout0]devsink@fb=format=fbdev:devname=/dev/fb0,

[a][vout1]moviesink@cap

Drawn as a diagram, the config above looks like the figure below, with the filters linked together.

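The pipeline above shows that FilterGraph connects the filters together. But something looks odd: recording certainly needs an encoder and a muxer, so where are they? They are contained inside special filters: the src and sink filters.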

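- src filter

A src filter receives external data, such as a network stream, a local file, a camera stream, or a mic stream. It contains a demuxer and a decoder as its own needs require.

- sink filter

A sink filter consumes data and is the end point of the pipeline. It contains a muxer and an encoder as its own needs require.

For example, the moviesink filter finds the matching encoder and muxer from the format of the recording file.

- Ordinary filter

An ordinary filter is a module that handles one single function other than encoding/decoding and muxing/demuxing. For example, the aresample filter reprocesses the audio sample rate according to the requirements of the filters before and after it, so that the downstream filter receives audio data at the correct sample rate.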

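The figure above also shows that FilterGraph automatically inserts data-conversion filters wherever the data on the two sides of a link does not match, so that data flows correctly through the whole pipeline. Filters with the auto_ prefix, such as auto_aresample and auto_scale, are not configured in the conf; the parsing code inserts them automatically.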
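The automatic insertion can be pictured as a pass over neighbouring filters that compares what one produces with what the next one accepts. The sketch below does this for audio sample rates only. ToyAudioFilter and toy_insert_converters are hypothetical, and the rule "insert a converter whenever the rates differ" is a deliberate simplification of the real format negotiation.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical description of one filter in a linear audio chain. */
typedef struct ToyAudioFilter {
    const char *name;
    int in_rate;  /* sample rate required on the input (unused for a src) */
    int out_rate; /* sample rate produced on the output (unused for a sink) */
} ToyAudioFilter;

/* Copy `in` to `out`, inserting an "auto_aresample" entry wherever the output
 * rate of one filter differs from the input rate of the next.
 * Returns the new chain length, or 0 if `out` is too small. */
static size_t toy_insert_converters(const ToyAudioFilter *in, size_t n,
                                    ToyAudioFilter *out, size_t cap)
{
    size_t count = 0;

    for (size_t i = 0; i < n; i++) {
        if (i > 0 && in[i - 1].out_rate != in[i].in_rate) {
            if (count == cap)
                return 0;
            /* Converter bridges the upstream rate to the downstream rate. */
            out[count].name = "auto_aresample";
            out[count].in_rate = in[i - 1].out_rate;
            out[count].out_rate = in[i].in_rate;
            count++;
        }
        if (count == cap)
            return 0;
        out[count++] = in[i];
    }
    return count;
}
```

For a chain whose source produces 48000 Hz and whose sink requires 44100 Hz, the pass returns a chain that is one entry longer, with the converter sitting directly in front of the sink.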

How is a FilterGraph operated?

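    const char *graph_desc = "/data/yxy.graph";
    AVFilterGraph *graph = NULL;
    AVFilterInOut *inputs = NULL, *outputs = NULL;

    // 1. Allocate the AVFilterGraph
    graph = avfilter_graph_alloc();

    // 2. Parse the config file graph_desc
    //    (upstream avfilter_graph_parse2() expects the description text itself,
    //    so a stock build would first read the file into a string)
    avfilter_graph_parse2(graph, graph_desc, &inputs, &outputs);
    avfilter_inout_free(&inputs);
    avfilter_inout_free(&outputs);

    // 3. Link the parsed filters together
    avfilter_graph_config(graph, NULL);

    while (!quit) {    // quit: flag set elsewhere when the pipeline should stop
        // Walk all filters in the AVFilterGraph, find the one with the
        // highest ready value, and run its activate callback
        ff_filter_graph_run_all(graph);
    }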
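To give a feel for what the parse step has to do, the sketch below splits one entry of the graph description, in the form [in]...name@instance=args[out]..., into its parts. It is a simplified model: ToyFilterSpec and the toy_* functions are hypothetical, the entry must be passed without its trailing comma, and arguments containing '[' are not supported.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define TOY_MAX_LABELS 4
#define TOY_STR 64

typedef struct ToyFilterSpec {
    char inputs[TOY_MAX_LABELS][TOY_STR];  /* input link labels */
    size_t nb_inputs;
    char name[TOY_STR];                    /* filter name, e.g. "streamselect" */
    char instance[TOY_STR];                /* part after '@', may be empty */
    char args[TOY_STR];                    /* part after the first '=', may be empty */
    char outputs[TOY_MAX_LABELS][TOY_STR]; /* output link labels */
    size_t nb_outputs;
} ToyFilterSpec;

/* Copy at most TOY_STR - 1 bytes from src into dst and terminate it. */
static void toy_copy(char *dst, const char *src, size_t len)
{
    if (len >= TOY_STR)
        len = TOY_STR - 1;
    memcpy(dst, src, len);
    dst[len] = '\0';
}

/* Read consecutive "[label]" items at *p, advance *p, return how many were read. */
static size_t toy_read_labels(const char **p, char labels[][TOY_STR])
{
    size_t n = 0;

    while (**p == '[' && n < TOY_MAX_LABELS) {
        const char *end = strchr(*p, ']');
        if (!end)
            break;
        toy_copy(labels[n++], *p + 1, (size_t)(end - *p - 1));
        *p = end + 1;
    }
    return n;
}

/* Parse one entry; returns 0 on success, -1 if the filter name is missing. */
static int toy_parse_filter(const char *s, ToyFilterSpec *spec)
{
    size_t body_len, name_len;
    const char *eq;

    memset(spec, 0, sizeof(*spec));
    spec->nb_inputs = toy_read_labels(&s, spec->inputs);

    body_len = strcspn(s, "[");    /* "name@instance=args" up to the output labels */
    name_len = strcspn(s, "@=[");
    if (name_len == 0)
        return -1;
    toy_copy(spec->name, s, name_len);

    eq = s + name_len;
    if (*eq == '@') {              /* optional instance name */
        const char *id = eq + 1;
        size_t id_len = strcspn(id, "=[");
        toy_copy(spec->instance, id, id_len);
        eq = id + id_len;
    }
    if (*eq == '=')                /* optional arguments */
        toy_copy(spec->args, eq + 1, body_len - (size_t)(eq + 1 - s));

    s += body_len;
    spec->nb_outputs = toy_read_labels(&s, spec->outputs);
    return 0;
}
```

Applied to the streamselect entry of the example config, this yields the input label v, the filter name streamselect, the instance name Video, the argument string inputs=1:map=0 -1, and the output labels vout0 and vout1. A full parser would then create each filter and connect links whose labels match.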

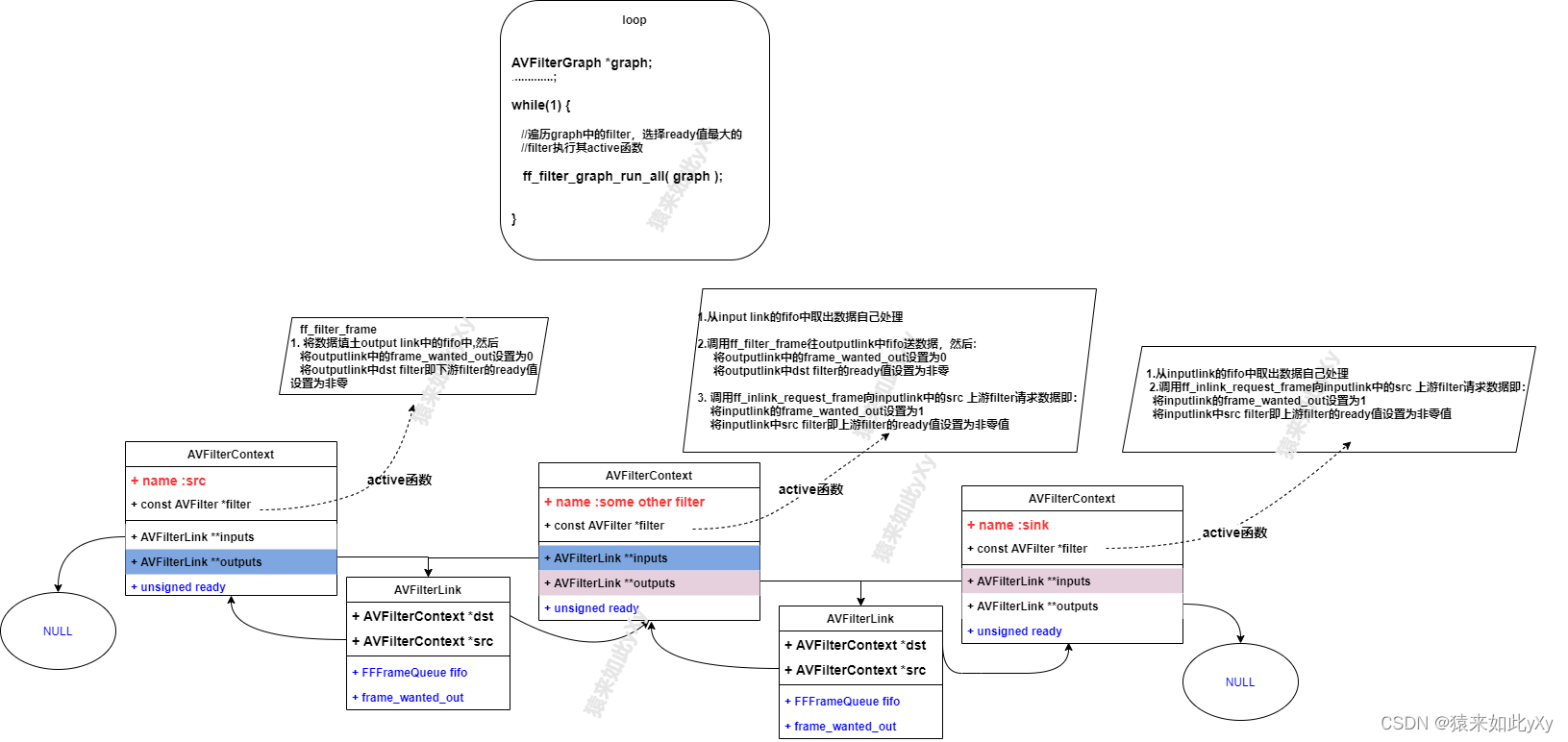

How does data flow through a FilterGraph?

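- Right after the FilterGraph is initialized, the ready value of every filter is 0.

- The application code must actively send a cmd to any one filter in the FilterGraph to activate it, that is, to set its ready value to a non-zero value.

- ff_filter_graph_run_all() runs the activate function of the filter activated in the previous step. That function activates its upstream filter, and so on, until the src filter is activated.

- Once the src filter is activated, its activate function fills the link to its downstream filter with data and activates that downstream filter to process it, and so on, until the data reaches the sink filter. At that point the first piece of data has been fully processed.

- After the sink filter finishes a piece of data, it requests data from its upstream filter again and processing continues with the next piece.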
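The steps above can be simulated with a three-filter chain (src, one ordinary filter, sink). The following is a toy model under stated assumptions, not the real scheduler: ToyGraph, toy_activate, and toy_run_once are hypothetical, each link holds at most one frame, and a frame is just an int.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_NB_FILTERS 3 /* index 0 = src, 1 = ordinary filter, 2 = sink */

typedef struct ToyFilter {
    unsigned ready; /* non-zero: activate should run for this filter */
    int has_input;  /* 1 while a frame waits on the input link */
    int input;      /* payload of that frame */
} ToyFilter;

typedef struct ToyGraph {
    ToyFilter f[TOY_NB_FILTERS];
    int next_value; /* next payload the src will produce */
    int consumed;   /* number of frames the sink has consumed */
    int last;       /* payload of the last consumed frame */
} ToyGraph;

/* Right after initialization every ready value is 0. */
static void toy_graph_init(ToyGraph *g)
{
    for (size_t i = 0; i < TOY_NB_FILTERS; i++) {
        g->f[i].ready = 0;
        g->f[i].has_input = 0;
        g->f[i].input = 0;
    }
    g->next_value = 1;
    g->consumed = 0;
    g->last = 0;
}

/* One activation of filter i, following the steps in the list above. */
static void toy_activate(ToyGraph *g, size_t i)
{
    ToyFilter *self = &g->f[i];

    self->ready = 0;
    if (i == 0) {
        /* src: fill the downstream link and activate the downstream filter */
        g->f[1].input = g->next_value++;
        g->f[1].has_input = 1;
        g->f[1].ready = 1;
    } else if (!self->has_input) {
        /* nothing to process: request data by activating the upstream filter */
        g->f[i - 1].ready = 1;
    } else if (i + 1 < TOY_NB_FILTERS) {
        /* ordinary filter: pass the frame on and activate the downstream filter */
        g->f[i + 1].input = self->input;
        g->f[i + 1].has_input = 1;
        g->f[i + 1].ready = 1;
        self->has_input = 0;
    } else {
        /* sink: consume the frame, then request the next one from upstream */
        g->last = self->input;
        g->consumed++;
        self->has_input = 0;
        g->f[i - 1].ready = 1;
    }
}

/* Run the filter with the highest ready value; returns 0 if the graph is idle. */
static int toy_run_once(ToyGraph *g)
{
    size_t best = 0;

    for (size_t i = 1; i < TOY_NB_FILTERS; i++)
        if (g->f[i].ready > g->f[best].ready)
            best = i;
    if (g->f[best].ready == 0)
        return 0;
    toy_activate(g, best);
    return 1;
}
```

After toy_graph_init() the graph is idle. Setting g.f[2].ready = 1 plays the role of the application's cmd. From there the request walks up to the src in two steps, the first frame travels down to the sink in three more, and each later frame takes four steps because the sink re-requests data on its own.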

A rough schematic is shown below: