ELK Cluster Installation Tutorial

Contents

- 1. Installing Elasticsearch

- 2. Installing Logstash

- 3. Installing Kibana

- 4. Installing Filebeat

- 5. Installing elasticsearch-analysis-ik

1. Installing Elasticsearch

- Download the elasticsearch, logstash, filebeat, and kibana packages from the official site, keeping all four on the same version where possible (this tutorial uses 7.6.2):

  - elasticsearch: https://www.elastic.co/cn/downloads/past-releases#elasticsearch
  - logstash: https://www.elastic.co/cn/downloads/past-releases#logstash
  - filebeat: https://www.elastic.co/cn/downloads/past-releases#filebeat
  - kibana: https://www.elastic.co/cn/downloads/past-releases#kibana

- Create the user and set its password

      useradd es
      passwd es

- Change to the user's home directory: cd /home/es

- Upload elasticsearch-7.6.2-linux-x86_64.tar.gz and extract it

- Create the log and data directories

      mkdir /home/es/elasticsearch-7.6.2/logs
      mkdir /home/es/elasticsearch-7.6.2/data

- Edit the configuration file: vi /home/es/elasticsearch-7.6.2/config/elasticsearch.yml

      cluster.name: es-application
      node.name: master
      path.data: /home/es/elasticsearch-7.6.2/data
      path.logs: /home/es/elasticsearch-7.6.2/logs
      network.host: 192.168.248.10
      discovery.seed_hosts: ["192.168.248.10","192.168.248.11","192.168.248.12"]
      cluster.initial_master_nodes: ["master"]
      node.master: true
      http.port: 9200
      http.cors.enabled: true
      http.cors.allow-origin: "*"

- Raise the resource limits: vi /etc/security/limits.conf, and append the following at the end of the file

      * soft nofile 65536
      * hard nofile 131072
      * soft nproc 65535
      * hard nproc 65535
      # End of file
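To confirm that the limits.conf entries above are actually in effect, a small check can read the limits of the current process. This is only a sketch: the thresholds are copied from the soft values above, and the helper names `limit_ok` and `report` are made up for illustration. Since limits.conf is applied at login, it should be run as the es user in a fresh session.

```python
import resource

# Thresholds copied from the soft limits in the limits.conf lines above.
MIN_SOFT = {"nofile": 65536, "nproc": 65535}


def limit_ok(soft: int, minimum: int) -> bool:
    """Return True when a soft limit is unlimited or at least `minimum`."""
    return soft == resource.RLIM_INFINITY or soft >= minimum


def report() -> dict:
    """Read the current process limits and compare them with MIN_SOFT."""
    current = {
        "nofile": resource.getrlimit(resource.RLIMIT_NOFILE)[0],
        "nproc": resource.getrlimit(resource.RLIMIT_NPROC)[0],
    }
    return {name: limit_ok(soft, MIN_SOFT[name]) for name, soft in current.items()}


if __name__ == "__main__":
    for name, ok in report().items():
        print(f"{name}: {'ok' if ok else 'too low'}")
```

If either line reports "too low", log out and back in as es before starting Elasticsearch.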

- Set the virtual memory map limit: vi /etc/sysctl.conf

      vm.max_map_count=655360

- Reload the kernel settings: sysctl -p
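After reloading, the value the kernel actually uses can be read back from /proc/sys/vm/max_map_count. The sketch below compares it with 262144, the minimum named in the Elasticsearch startup error quoted later in this section. The function names are illustrative only.

```python
from pathlib import Path

# Minimum taken from the Elasticsearch bootstrap error message (262144).
REQUIRED = 262144
PROC_FILE = Path("/proc/sys/vm/max_map_count")


def parse_max_map_count(text: str) -> int:
    """Parse the content of /proc/sys/vm/max_map_count into an integer."""
    return int(text.strip())


def is_sufficient(text: str, required: int = REQUIRED) -> bool:
    """Return True when the parsed value meets the required minimum."""
    return parse_max_map_count(text) >= required


if __name__ == "__main__":
    if PROC_FILE.exists():
        raw = PROC_FILE.read_text()
        state = "ok" if is_sufficient(raw) else "too low"
        print(f"vm.max_map_count = {parse_max_map_count(raw)} ({state})")
    else:
        print(f"{PROC_FILE} not found; this check only applies to Linux")
```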

- Give the es user ownership of its home directory: chown -R es:es /home/es

-

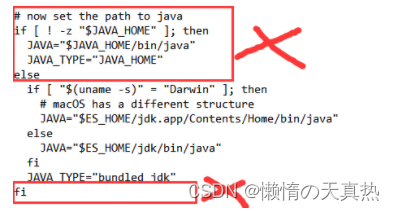

由于我本地装的是jdk8,而es运行需要jdk11,所以修改配置:vi /home/es/elasticsearch-7.6.2/bin/elasticsearch-env,删除判断

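- Clone the virtual machine twice to form the cluster, then adjust elasticsearch.yml on each copy. The per-node settings are shown below with the first node's values; every node needs its own node.name and network.host.

      node.name: master
      network.host: 192.168.248.10
      node.master: true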
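Editing three nearly identical files by hand is error-prone, so the per-node settings can also be rendered from one template. The sketch below is an illustration, not part of the original setup: the node names node-2 and node-3 are hypothetical (the tutorial only names the first node, master), and the name-to-IP mapping is an assumption based on the seed host list.

```python
SEED_HOSTS = ["192.168.248.10", "192.168.248.11", "192.168.248.12"]

# Hypothetical node names; only "master" appears in the tutorial itself.
NODES = {
    "master": "192.168.248.10",
    "node-2": "192.168.248.11",
    "node-3": "192.168.248.12",
}


def render_node_config(node_name: str, host: str) -> str:
    """Return elasticsearch.yml text for one node; only name and host vary."""
    seeds = ",".join(f'"{h}"' for h in SEED_HOSTS)
    lines = [
        "cluster.name: es-application",
        f"node.name: {node_name}",
        f"network.host: {host}",
        f"discovery.seed_hosts: [{seeds}]",
        'cluster.initial_master_nodes: ["master"]',
        "node.master: true",
        "http.port: 9200",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    for name, host in NODES.items():
        print(f"# ---- {name} ----")
        print(render_node_config(name, host))
```

The path and CORS settings are the same on every node and are left out here for brevity.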

- Start Elasticsearch as the es user (it refuses to run as root): /home/es/elasticsearch-7.6.2/bin/elasticsearch

      cd /home/es/elasticsearch-7.6.2/bin
      nohup ./elasticsearch &

- Errors you may encounter and how to fix them

1) max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

       cat /proc/sys/vm/max_map_count
       sudo sysctl -w vm.max_map_count=262144
       cat /proc/sys/vm/max_map_count

2) the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured

   Set the following in elasticsearch.yml:

       cluster.initial_master_nodes: ["master"]

2. Installing Logstash

- Upload logstash-7.6.2.tar.gz and extract it

- Edit the configuration file: vi /home/es/logstash-7.6.2/config/logstash-sample.conf

      # Sample Logstash configuration for creating a simple
      # Beats -> Logstash -> Elasticsearch pipeline.

      input {
        # Receive logs from Beats on this port and forward them to ES
        beats {
          port => 5044
        }
        # Read local log files into ES
        # file {
        #   path => ['/home/es/logdata/*.log']
        # }
      }

      filter {
        mutate {
          remove_field => [ "host" ]
        }
      }

      output {
        if [fields][filetype] == "testlog-log" {
          elasticsearch {
            hosts => ["http://192.168.248.10:9200","http://192.168.248.11:9200","http://192.168.248.12:9200"]
            index => "testlog-%{+YYYY.MM.dd}"
          }
        } else if [fields][filetype] == "jar-log" {
          elasticsearch {
            hosts => ["http://192.168.248.10:9200","http://192.168.248.11:9200","http://192.168.248.12:9200"]
            index => "jar-%{+YYYY.MM.dd}"
            #user => "elastic"
            #password => "changeme"
          }
        } else {
          elasticsearch {
            hosts => ["http://192.168.248.10:9200","http://192.168.248.11:9200","http://192.168.248.12:9200"]
            index => "hdfs-%{+YYYY.MM.dd}"
            #user => "elastic"
            #password => "changeme"
          }
        }
      }
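The output section above routes each event to a daily index according to its fields.filetype value, falling back to hdfs-* when the field is missing or unrecognized. The same decision can be written out as a small Python function to make the naming explicit. This is a sketch of the routing logic only, not how Logstash implements it; `index_for` is an illustrative name. Logstash takes the date from the event's @timestamp, which is in UTC.

```python
from datetime import date
from typing import Optional

# filetype value -> index prefix, mirroring the if / else if branches above.
PREFIX_BY_FILETYPE = {
    "testlog-log": "testlog",
    "jar-log": "jar",
}
DEFAULT_PREFIX = "hdfs"  # the final else branch


def index_for(filetype: Optional[str], day: date) -> str:
    """Return the index name an event with this filetype would be written to."""
    prefix = PREFIX_BY_FILETYPE.get(filetype, DEFAULT_PREFIX)
    return f"{prefix}-{day:%Y.%m.%d}"


if __name__ == "__main__":
    today = date.today()
    for filetype in ("testlog-log", "jar-log", None):
        print(filetype, "->", index_for(filetype, today))
```

Events read by the commented-out file input carry no fields.filetype, so they would land in the hdfs-* index.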

- The default JVM heap is 1g; on a low-spec machine you can reduce it (optional): vi /home/es/logstash-7.6.2/config/jvm.options

      -Xms400M
      -Xmx400M

- Start Logstash: nohup /home/es/logstash-7.6.2/bin/logstash -f /home/es/logstash-7.6.2/config/logstash-sample.conf &

3. Installing Kibana

- Upload kibana-7.6.2-linux-x86_64.tar.gz and extract it

- Edit the configuration: vi /home/es/kibana-7.6.2-linux-x86_64/config/kibana.yml

      server.port: 5601
      server.host: "192.168.248.10"
      elasticsearch.hosts: ["http://192.168.248.10:9200","http://192.168.248.11:9200","http://192.168.248.12:9200"]
      i18n.locale: "zh-CN"

- Start Kibana: nohup /home/es/kibana-7.6.2-linux-x86_64/bin/kibana &

-

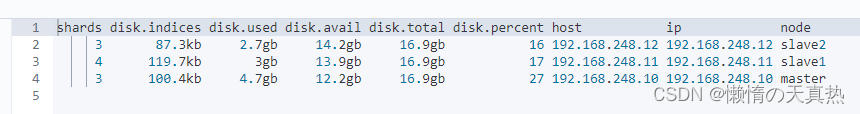

测试es集群的健康状态:get _cat/allocation?v
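The same check can be scripted outside Kibana by calling the _cluster/health endpoint over HTTP. The sketch below assumes the first node is reachable at http://192.168.248.10:9200 without authentication, as configured earlier; `fetch_health` and `summarize_health` are illustrative names.

```python
import json
from urllib.request import urlopen


def fetch_health(base_url: str) -> str:
    """Fetch the raw JSON body of GET /_cluster/health from one node."""
    with urlopen(f"{base_url}/_cluster/health", timeout=5) as resp:
        return resp.read().decode("utf-8")


def summarize_health(body: str) -> str:
    """Condense a _cluster/health response into one readable line."""
    data = json.loads(body)
    return (
        f"{data['cluster_name']}: {data['status']} "
        f"({data['number_of_nodes']} nodes)"
    )
```

Calling `print(summarize_health(fetch_health("http://192.168.248.10:9200")))` on a machine that can reach the cluster prints the cluster name, its green/yellow/red status, and the node count; with all three nodes joined, the count should be 3.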

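- Test

1) In Logstash's logstash-sample.conf, change the input section so that the file input is enabled, then restart Logstash

       input {
         # Receive logs from Beats on this port and forward them to ES
         beats {
           port => 5044
         }
         # Read local log files into ES (uncommented for this test)
         file {
           path => ['/home/es/logdata/*.log']
         }
       }

2) Manually edit a log file under /home/es/logdata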

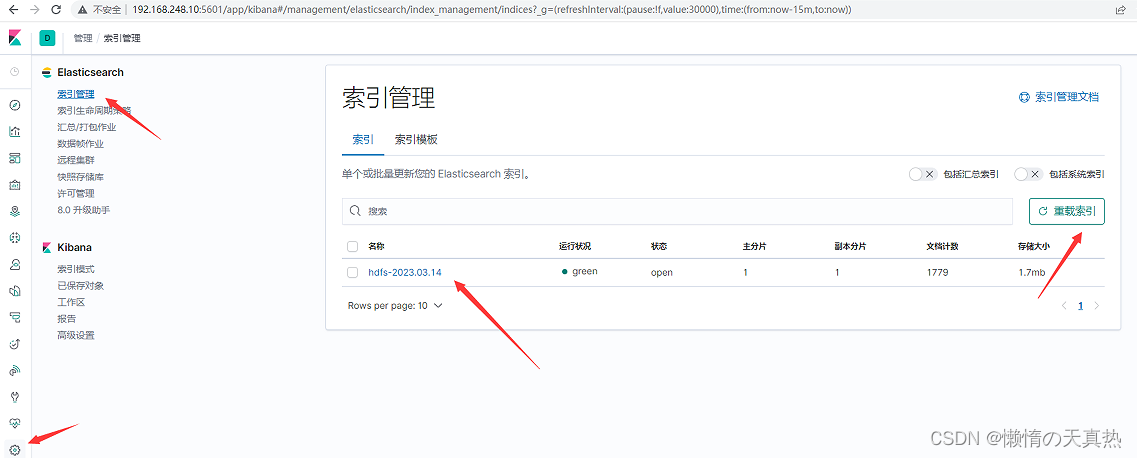

3) Check in Kibana; the new entries show that the log was read successfully

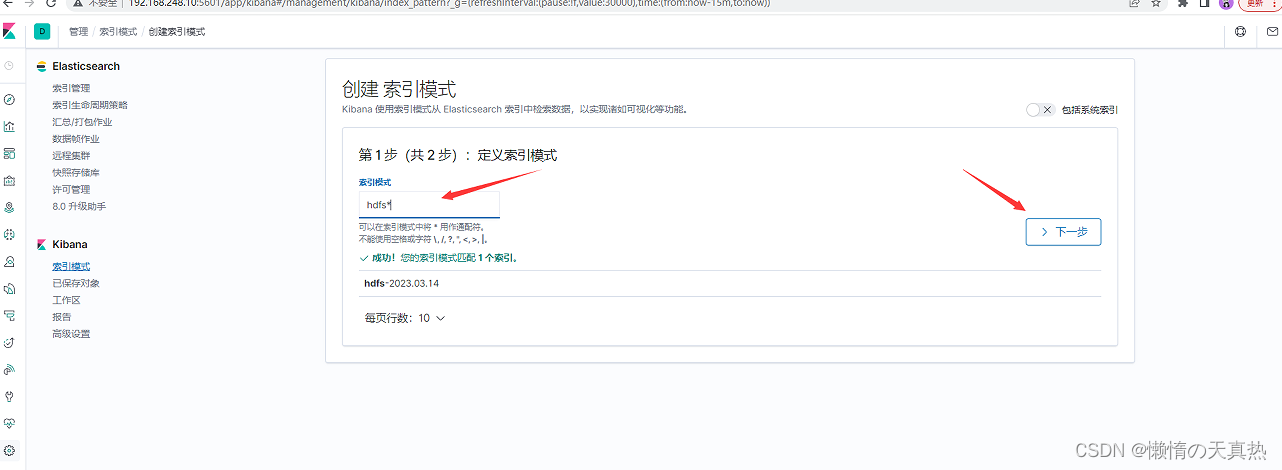

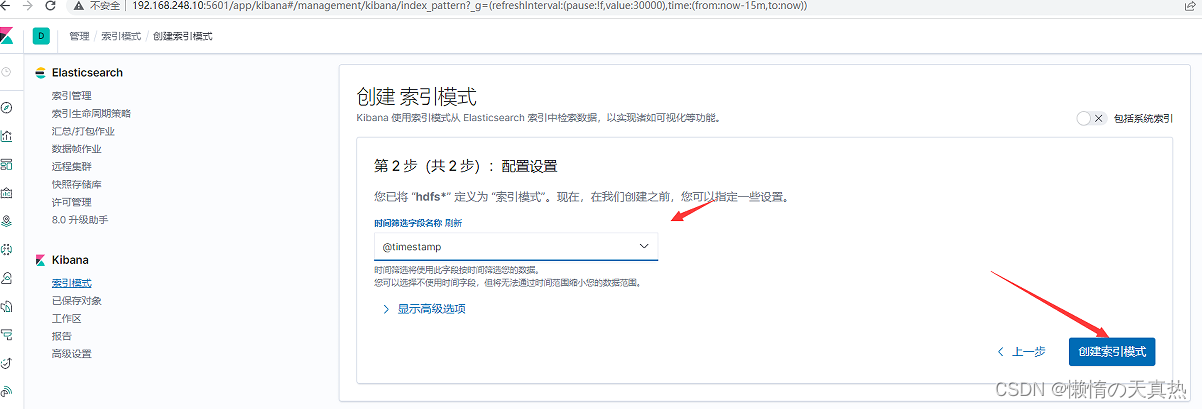

4) Create an index pattern

![Create an index pattern](/uploadfile/202505/a9d96bceee350d.png)

5) View the contents

![View the contents](/uploadfile/202505/4f9aad82a9d5c32.png)

6) When the test is finished, restore logstash-sample.conf to its previous state and restart Logstash
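Step 2 of the test edits a log file by hand. For repeated runs, a few lines can be appended from a script instead. This is a minimal sketch: the line format and the function name `append_test_lines` are made up for illustration, and any file matching /home/es/logdata/*.log would do as the target.

```python
from datetime import datetime
from pathlib import Path


def append_test_lines(log_path: str, count: int = 3) -> list:
    """Append `count` timestamped test lines to log_path and return them."""
    path = Path(log_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().isoformat(timespec="seconds")
    lines = [f"{stamp} INFO test message {i}\n" for i in range(1, count + 1)]
    # Open in append mode so existing log content is preserved.
    with path.open("a", encoding="utf-8") as handle:
        handle.writelines(lines)
    return lines
```

For example, `append_test_lines("/home/es/logdata/test.log")` (test.log being a hypothetical file name) adds three lines for the file input to read.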

4. Installing Filebeat

- Upload filebeat-7.6.2-linux-x86_64.tar.gz and extract it

- Edit the configuration file: vi /home/es/filebeat-7.6.2-linux-x86_64/filebeat.yml

      # Output to Logstash
      output.logstash:
        hosts: ["192.168.248.10:5044"]

  Comment out the following section:

      #-------------------------- Elasticsearch output ------------------------------
      #output.elasticsearch:
        # Array of hosts to connect to.
        #hosts: ["localhost:9200"]

        # Protocol - either `http` (default) or `https`.
        #protocol: "https"

        # Authentication credentials - either API key or username/password.
        #api_key: "id:api_key"
        #username: "elastic"
        #password: "changeme"

  Then configure the inputs:

      # Configure the logs to collect. Logs from different applications are usually
      # collected as separate inputs and all shipped to port 5044.
      # The applications may run on different servers, but each server needs its own Filebeat.
      # A custom filetype field can be set here; it is passed to Logstash and used to classify the logs.
      filebeat.inputs:
      - type: log
        enabled: true
        paths:
          - /home/es/testlog-log/*.log
        fields:
          filetype: testlog-log
      - type: log
        enabled: true
        paths:
          - /home/es/jar-log/*.log
        fields:
          filetype: jar-log

  Note: the input section of logstash-sample.conf must listen for Beats on the same port:

      input {
        beats {
          port => 5044
        }
      }

- Start Filebeat: nohup /home/es/filebeat-7.6.2-linux-x86_64/filebeat -e -c /home/es/filebeat-7.6.2-linux-x86_64/filebeat.yml &

5. Installing elasticsearch-analysis-ik

- Create the plugin directory: mkdir /home/es/elasticsearch-7.6.2/plugins/analysis-ik

- Unzip elasticsearch-analysis-ik-7.6.2 locally and upload its contents to /home/es/elasticsearch-7.6.2/plugins/analysis-ik

- Restart Elasticsearch

-

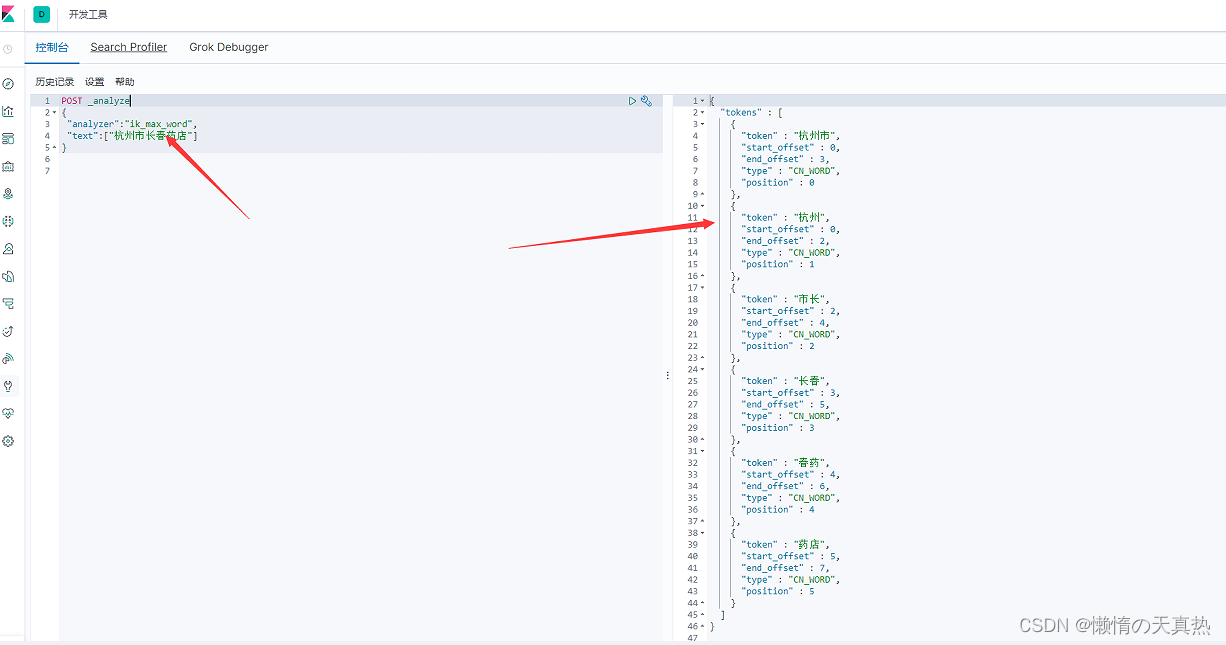

测试

POST _analyze {"analyzer":"ik_max_word","text":["杭州市长春药店"] }解析成功
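The _analyze request above can also be sent from a script, which is handy for checking the plugin on each node after a restart. The sketch below builds the same request with the standard library and pulls the token strings out of the response. The base URL assumes the first node from this tutorial with no authentication, and the function names are illustrative.

```python
import json
from urllib.request import Request, urlopen


def build_analyze_request(base_url: str, text: str,
                          analyzer: str = "ik_max_word") -> Request:
    """Build a POST /_analyze request equivalent to the console example."""
    body = json.dumps({"analyzer": analyzer, "text": [text]},
                      ensure_ascii=False).encode("utf-8")
    return Request(
        f"{base_url}/_analyze",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_tokens(response_body: str) -> list:
    """Return the token strings from an _analyze JSON response."""
    return [item["token"] for item in json.loads(response_body)["tokens"]]


def analyze(base_url: str, text: str) -> list:
    """Send the request to a running node and return its tokens."""
    with urlopen(build_analyze_request(base_url, text), timeout=5) as resp:
        return extract_tokens(resp.read().decode("utf-8"))
```

Calling `analyze("http://192.168.248.10:9200", "杭州市长春药店")` returns the list of tokens the analyzer produced. If the plugin is not loaded, Elasticsearch rejects the unknown analyzer and `urlopen` raises an HTTP error.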